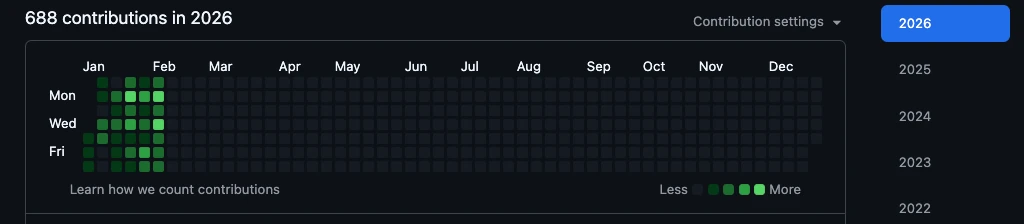

Current, 2026

Saturday, February 14

February

It’s been a busy start to the year. New York has been extremely cold over the past month: today’s high is -9°C and the low is -17°C, with gusts making it feel like -26°C. The last two weeks have been especially productive. I have been working on multiple projects at work and have been heads down on building.

Running and scaling Luna has been going well. I’ve been speaking to a couple of potential co-founders and trying out (gambling on) a few ideas. The process is fun but extremely exhausting. I will write about all of this in more detail.

In the current economy, where tech has started underperforming the NASDAQ, I have no idea what to expect in the next few weeks. A couple of models from now, I think everything I do and think will be obsolete. I’d have to hunt for a new job. I might try out astrophysics, or philosophy, or maybe even consider farming. Claude and GPT will be taking over the world any time now.

After the dust settles, I’d like to leave and explore the world for a bit. Spend some time vacationing somewhere warm.

January

Happy New Year! Kicking off 2026 with a renewed focus on personal growth and exploration. What’s new:

- Authentic is now live on both app stores. Excited to see how you guys engage with it! (iOS | Android)

- Tried snowboarding for the first time. Loved it. Planning to visit the mountains more often this year. The goal is to do a black diamond run within a year.

- Back to focusing on fitness. Set a goal to reach a certain body-fat percentage by mid-year.

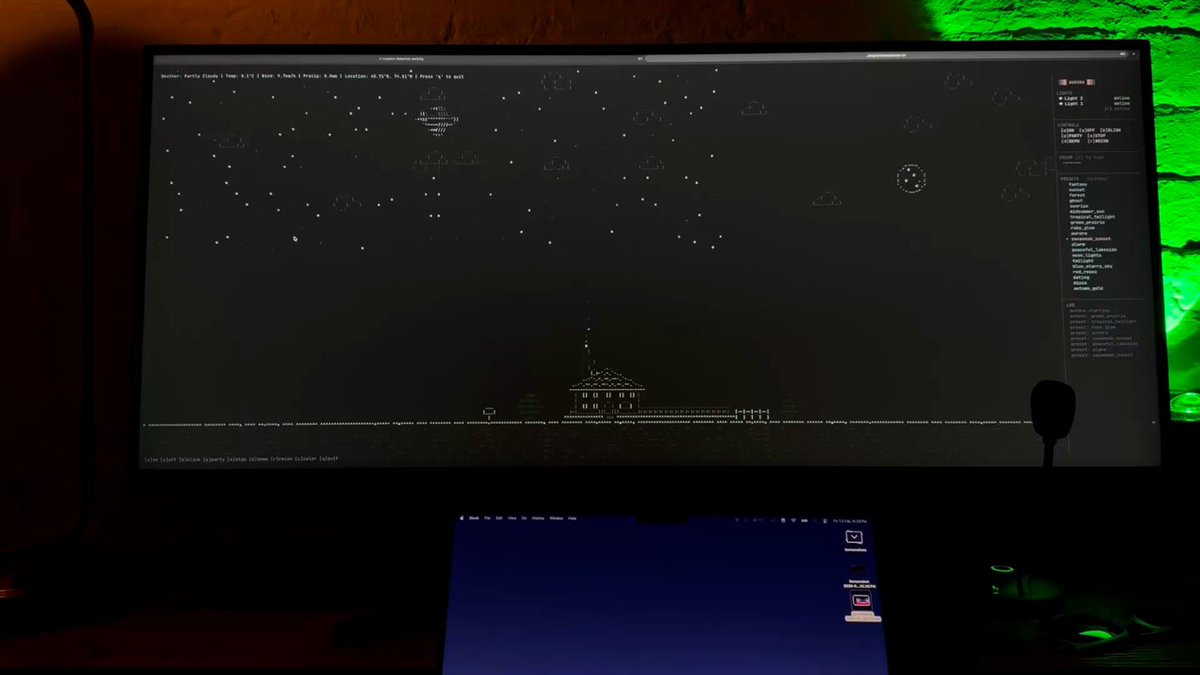

- Engaged in a few exciting projects at work, including a new AI initiative and a community platform. More details to come soon.

In other news, excited to launch Luna, my venture studio! Luna is one month old, already generating revenue, has multiple projects in the pipeline, and is setting up a venture of its own. Looking forward to sharing more updates soon.